The Gen AI Deployment Strategy for Real-World Teams

How organizations can align readiness, governance, and trust to achieve meaningful impact

Every few decades, a technology reshapes the rhythm of work. Generative AI is doing that now, transforming industries, redefining workflows, and expanding how knowledge is created and shared — a movement the U.S. government is also shaping through its national AI strategy.

For many leaders, that pace brings both promise and pressure. The opportunity feels immense, but so does the uncertainty. The central question is no longer what AI can achieve; it’s how it can be deployed with precision, purpose, and accountability.

A Gen AI deployment strategy brings that clarity. It turns experimentation into progress, aligning people, processes, and policies under a shared vision of innovation. It ensures that every model, prompt, and workflow strengthens what your organization already does well.

Understanding Readiness: The Foundation of Responsible Deployment

Before organizations can scale AI confidently, they must understand where they stand today. The White House’s America’s AI Action Plan echoes this principle, emphasizing preparedness and infrastructure as the foundation of responsible AI growth. Readiness is not about how much technology you have, but how prepared your teams are to work alongside it. Knowing your starting point shapes every decision that follows.

Two guiding questions help reveal that readiness.

First: What happens if the output is wrong?

If the impact is minimal, experimentation is safe. But when errors could affect compliance, reputation, or public trust, oversight and validation must be built in from the start.

Second: How well does your team understand the task?

If the task is familiar, AI enhances speed and consistency. If it’s new or ambiguous, both humans and systems struggle to define what success looks like.

These questions create a foundation for your Gen AI deployment strategy. They help you identify which tasks can be safely automated, where human review is essential, and how to build confidence as your organization adopts new ways of working.

Mapping Use Cases: The Four Zones of Intelligent Deployment

Once readiness is understood, the next step is identifying where AI can create the most value. Every task sits within a balance of risk and familiarity, and that balance determines the right approach to deployment.

Zone 1: Low Risk, Familiar Tasks

These are everyday activities your teams already perform with confidence, such as summarizing documents, writing internal updates, or generating meeting notes. Mistakes are easy to identify and correct. Starting here builds early success stories that demonstrate AI’s practical value and help teams learn together.

Zone 2: Low Risk, Unfamiliar Tasks

This is where creativity thrives. Brainstorming, ideation, and concept generation all benefit from AI’s ability to explore options quickly. The key is to pair experimentation with structured feedback so teams learn to recognize what “good” looks like in new contexts.

Zone 3: High Risk, Unfamiliar Tasks

When the task is complex and the stakes are high, caution becomes essential. Legal drafting, medical advice, and financial forecasting all require domain expertise and oversight. In these cases, a Gen AI deployment strategy should prioritize governance, human validation, and transparency over speed.

Zone 4: High Risk, Familiar Tasks

These are critical operations your teams understand deeply, such as policy updates, compliance writing, or customer communication. Here, AI can support efficiency, but human review must remain central to ensure accuracy, tone, and accountability.

Each of these zones offers insight into where to begin and how to expand. Together, they transform the abstract idea of “AI readiness” into a clear map for responsible implementation.
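The four zones can be written down as a small lookup, which some teams may find handy when triaging a backlog of candidate use cases. The sketch below is illustrative only: the names `Zone`, `classify`, `requires_human_review`, and the `GUIDANCE` wording are assumptions made for this example, and reducing risk and familiarity to yes/no answers is a simplification of what is really a judgment call.

```python
from enum import Enum


class Zone(Enum):
    """The four zones, numbered as in the section above."""
    LOW_RISK_FAMILIAR = 1
    LOW_RISK_UNFAMILIAR = 2
    HIGH_RISK_UNFAMILIAR = 3
    HIGH_RISK_FAMILIAR = 4


# Hypothetical one-line guidance, paraphrased from the zone descriptions.
GUIDANCE = {
    Zone.LOW_RISK_FAMILIAR: "Start here; mistakes are easy to spot and correct.",
    Zone.LOW_RISK_UNFAMILIAR: "Experiment, paired with structured feedback.",
    Zone.HIGH_RISK_UNFAMILIAR: "Prioritize governance and human validation over speed.",
    Zone.HIGH_RISK_FAMILIAR: "Use AI for efficiency; keep human review central.",
}


def classify(high_risk: bool, familiar: bool) -> Zone:
    """Map the two readiness questions onto a zone."""
    if high_risk:
        return Zone.HIGH_RISK_FAMILIAR if familiar else Zone.HIGH_RISK_UNFAMILIAR
    return Zone.LOW_RISK_FAMILIAR if familiar else Zone.LOW_RISK_UNFAMILIAR


def requires_human_review(zone: Zone) -> bool:
    """Assumption for this sketch: both high-risk zones always need review."""
    return zone in (Zone.HIGH_RISK_UNFAMILIAR, Zone.HIGH_RISK_FAMILIAR)


if __name__ == "__main__":
    # Example tasks taken from the zone descriptions; the flags are judgment calls.
    tasks = {
        "Summarize meeting notes": (False, True),
        "Brainstorm campaign concepts": (False, False),
        "Draft a legal contract": (True, False),
        "Update compliance policy text": (True, True),
    }
    for name, (high_risk, familiar) in tasks.items():
        zone = classify(high_risk, familiar)
        review = "review required" if requires_human_review(zone) else "review optional"
        print(f"{name}: Zone {zone.value} ({review}). {GUIDANCE[zone]}")
```

In practice, the two boolean inputs would come from a conversation with the people who own the task, not from code; the lookup only records the outcome consistently.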

Building the Framework: From Experimentation to Enterprise

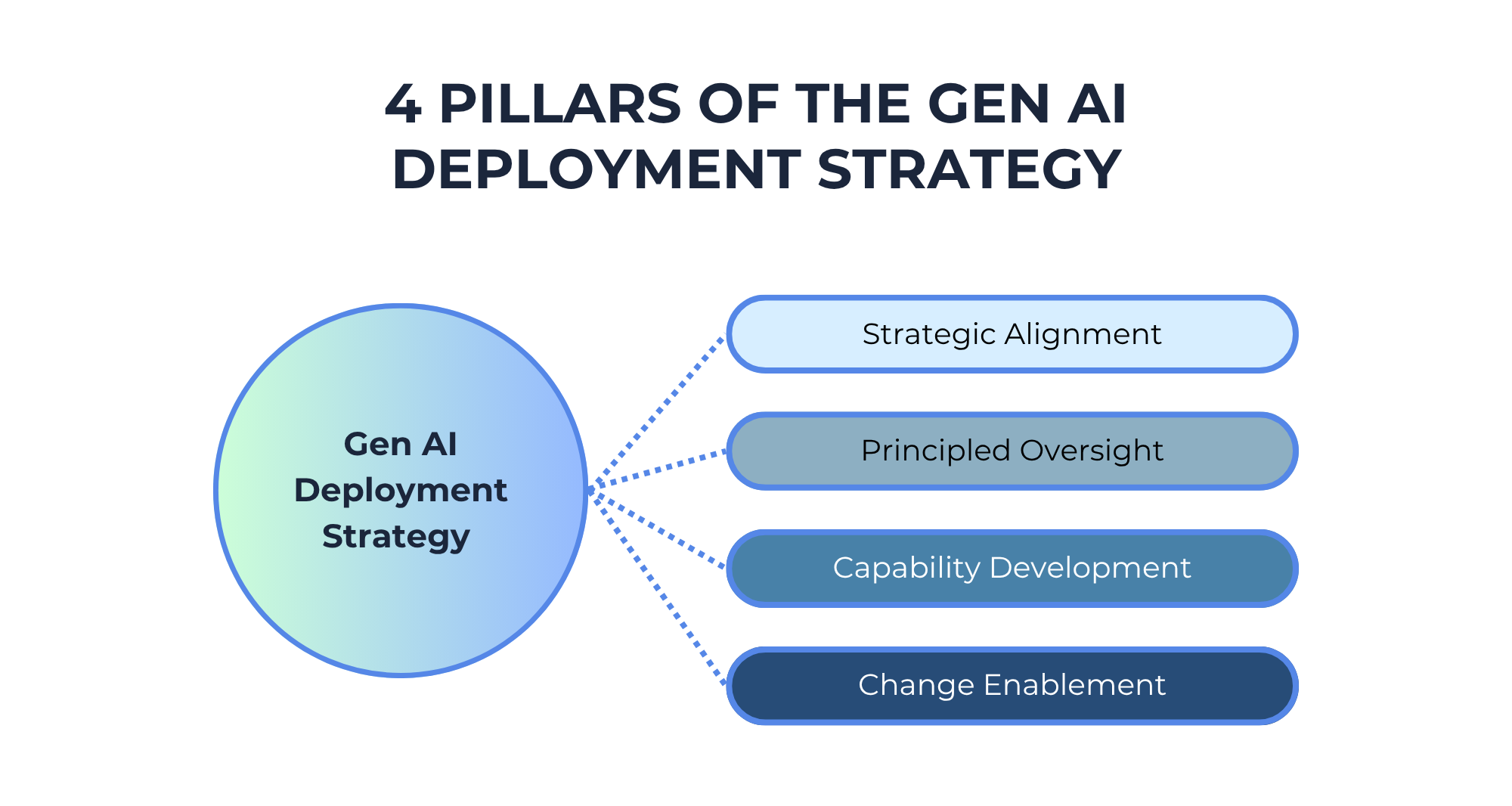

Identifying the right use cases is only the beginning. True transformation happens when experimentation evolves into a structured, scalable practice. A mature Gen AI deployment strategy connects innovation to long-term organizational goals through four foundational pillars.

Strategic Alignment

Every initiative should serve a defined purpose. Whether it’s improving service delivery, enhancing productivity, or driving innovation, each project should tie directly to your organization’s mission and measurable outcomes.

Principled Oversight

Responsible AI use begins with principle, not policy. Establish frameworks that define accountability, fairness, and transparency early. This ensures decisions made by or with AI remain explainable, consistent, and aligned with organizational ethics.

Capability Development

Technology adoption succeeds only when people are confident in using it. Training, guidance, and feedback loops help teams understand both AI’s strengths and its limitations. Building capability turns passive users into active problem-solvers.

Change Enablement

AI transformation is cultural before it is technical. Communicate openly, celebrate learning, and involve employees early in shaping processes. When people see themselves as contributors to change, they sustain it.

These pillars form the structural integrity of your Gen AI deployment strategy. They turn innovation from isolated projects into an integrated, enduring capability.

Human Oversight: The Cornerstone of Trust

No matter how advanced AI becomes, human judgment remains essential. Oversight is not a barrier to innovation; it is what gives innovation meaning. From design to deployment, every output should reflect ethical intent and contextual awareness — aligning with federal AI governance principles outlined by NIST and AI.gov, which prioritize human judgment as an essential layer of accountability.

Embedding review and validation into workflows ensures decisions are grounded in accountability, a principle aligned with the NIST AI Risk Management Framework, which helps organizations build trust and transparency into their AI systems. That practice tells employees, clients, and partners that AI is being used responsibly and with care. In the long term, that trust becomes one of your greatest strategic assets.

Governance and Transparency: Designing for Confidence

As principled oversight matures, governance evolves from framework to function, moving from theoretical principles to operational discipline. As AI becomes part of daily operations, it must also shift from reactive compliance to proactive stewardship. Transparency is central to this evolution. It means explaining how systems are trained, what data they use, and how results are evaluated.

Effective governance doesn’t restrict innovation; it enables it.

Strong frameworks include:

- Guardrails that adapt as technologies and use cases mature

- Data standards that maintain fairness, privacy, and integrity

- Open communication that helps users understand how AI contributes to outcomes

When governance is viewed as a design principle rather than a safeguard, AI adoption becomes both faster and safer.

Scaling for the Future of Intelligent Work

Early AI successes often begin within small teams or focused departments, where curiosity meets experimentation. The true measure of progress, however, lies in translating those initial wins into lasting, enterprise-wide capability.

Scaling responsibly means learning as you grow. Each project reveals insights about governance, data integrity, and the balance between automation and human judgment. When those lessons are shared and embedded across teams, they turn innovation from a moment into a movement.

A mature Gen AI deployment strategy is built on that continuity. It evolves through iteration, deepens through reflection, and strengthens through trust. Over time, AI becomes more than a tool for productivity. It becomes a catalyst for organizational intelligence, amplifying creativity, decision-making, and ethical practice.

As AI integration advances, the nature of work itself begins to shift. The future of intelligent work is not defined by what technology replaces, but by what it enables: greater purpose, sharper insight, and more meaningful human contribution.

Progress should always elevate people. The organizations that thrive will be those that deploy AI with intent, lead with integrity, and design every system around the enduring value of human potential.