AI Policy: The Strategic Framework Every Organization Needs Right Now

79% of business professionals have encountered generative AI. 22% use it regularly at work. Yet 58% of organizations deploying AI lack formal protocols for security, responsible usage, or ethical safeguards.

This lack of governance leaves organizations exposed to growing risks. Employees try out new tools without leadership’s knowledge. Customer data may pass through unreviewed platforms. Compliance requirements get overlooked. When problems such as security breaches, biased outcomes, or regulatory violations occur, companies often discover too late that missing governance was the root cause.

Creating an AI policy helps manage these risks before they become bigger problems. A clear framework guides how your organization adopts, uses, and oversees AI technologies. It also protects data, supports compliance, and encourages innovation.

What Is an AI Policy?

An AI policy is a documented framework that guides your organization’s use of artificial intelligence technologies. It defines which tools are approved, what data can be processed, how outputs should be validated, and who holds accountability for AI-driven decisions.

It serves multiple functions: clarifying expectations for employees, establishing governance structures, creating evaluation protocols for new tools, and setting boundaries that protect sensitive information while enabling productive use.

To be effective, the policy must address several core components:

- Scope and Applicability: Who must follow the policy (employees, contractors, vendors, and partners) and which AI systems it covers.

- Approved Tools and Access: Which applications have been reviewed and approved, and how employees gain access.

- Usage Guidelines: What data can be shared, when human review is required, and how outputs should be verified.

- Governance Structures: Who enforces the policy, investigates violations, and evaluates new tools.

- Compliance Requirements: Which laws and regulations apply in each industry and jurisdiction where the organization operates.

- Training Protocols: How employees learn to apply the policy in daily work.

- Review Cycles: How the policy evolves as technology and regulations change.

An AI policy translates organizational values and risk tolerance into operational clarity. It empowers employees to innovate confidently while protecting the organization from preventable risks.

Why You Need an AI Policy

Organizations that use AI without formal governance face risks sooner than they might expect. These risks include issues with compliance, data security, operational integrity, and reputation.

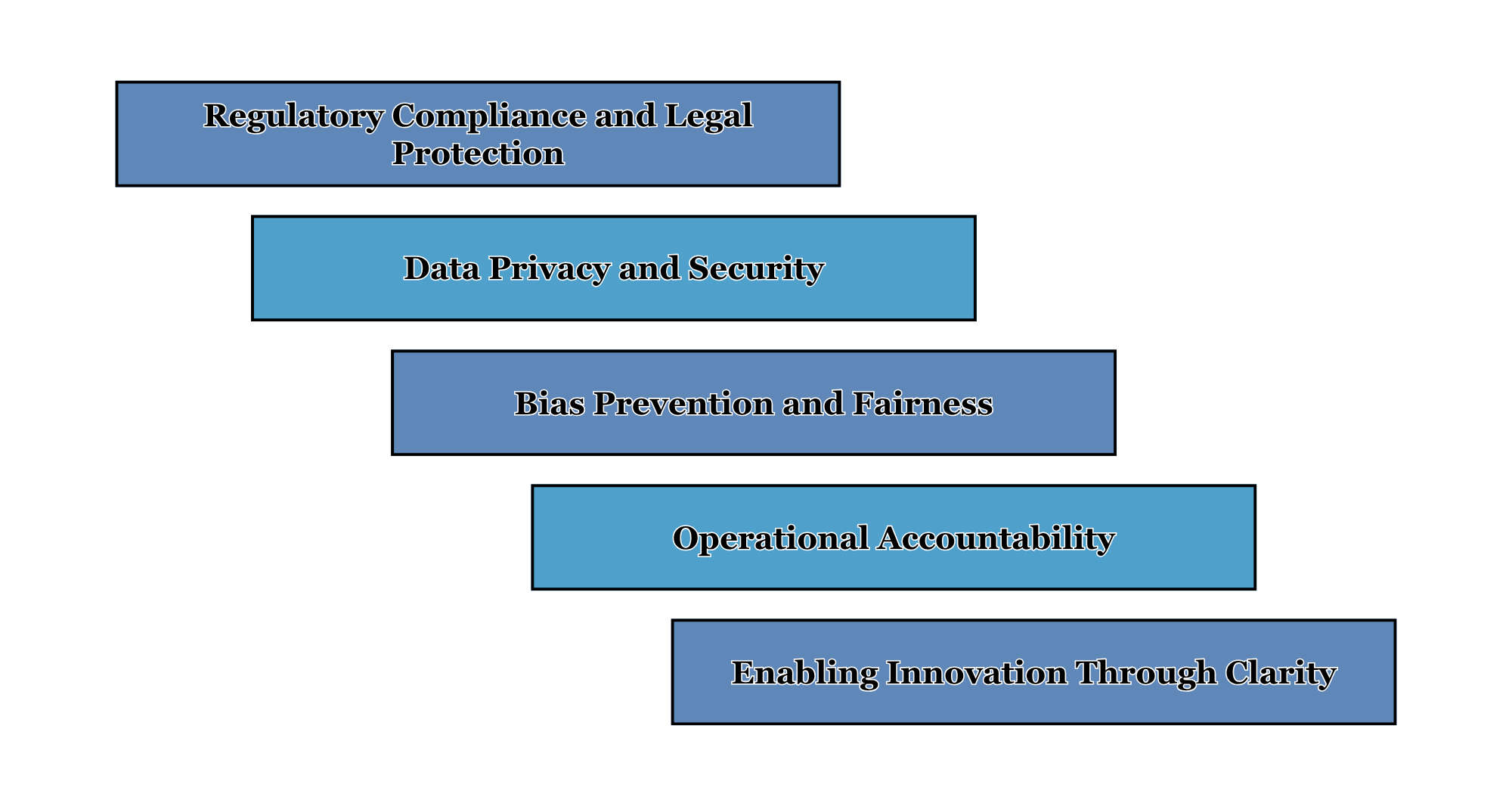

Regulatory Compliance and Legal Protection

AI-related regulation in the U.S. is expanding rapidly, and existing rules already apply to many AI uses. Financial services firms must comply with fair lending rules and requirements governing algorithmic decision-making. Healthcare providers face HIPAA obligations when AI tools handle protected health information. EEOC guidance addresses AI-driven hiring and performance evaluation. State laws, such as the California Consumer Privacy Act, impose further requirements on consumer data handling.

Internationally, frameworks like the EU’s AI Act affect U.S. companies with global operations or European customers.

Without a documented policy, organizations struggle to demonstrate compliance during audits. They lack visibility into which systems are in use, making it difficult to assess whether deployments meet regulatory standards. A formal policy provides the infrastructure to track AI usage, document decisions, and show regulators that governance is in place.

Data Privacy and Security

Employees may not realize that information entered into public AI tools can be retained and used as training data. If customer details, financial data, or strategic plans are submitted to unapproved platforms, that information may be exposed or stored outside the organization’s control.

Organizations have already suffered costly data leaks caused by well-intentioned misuse. Without clear policies specifying approved tools and data boundaries, they depend on employees’ inconsistent judgment calls. An AI policy sets explicit data-handling rules that protect sensitive assets while still enabling productive use.

Bias Prevention and Fairness

AI systems trained on historical data can replicate the biases embedded in that data. Hiring algorithms may favor overrepresented demographics. Lending models may disadvantage underserved groups. These biases scale quickly, turning isolated issues into systemic ones.

A formal policy embeds review mechanisms to evaluate AI systems for bias before deployment and monitor them during operation. Fairness becomes standard practice, not an afterthought.

Operational Accountability

When AI systems make flawed recommendations, accountability becomes murky. Was it the developer? The deployment team? The executive who approved the budget?

An AI policy clarifies roles throughout development, deployment, and use. It defines who makes adoption decisions, who monitors performance, and who responds to issues. This clarity helps organizations resolve problems quickly and keep improving.

Enabling Innovation Through Clarity

When people do not know what is allowed, they hesitate. Employees unsure whether an AI tool is safe or approved may avoid it, even when it could help the organization.

Clear policies remove that uncertainty. When employees know which tools are approved, what uses are encouraged, and how to handle edge cases, they adopt AI confidently. The framework provides permission to experiment within safe boundaries.

Building an Effective AI Policy Framework

To create a policy that truly serves your organization, think carefully about its goals, who it will impact, and how it can change as your needs evolve.

Define Context and Objectives

Start by identifying the business problems AI should solve. Where do manual processes create bottlenecks? What decisions would benefit from scalable data analysis? Which customer interactions could be enhanced through automation?

Next, state the core values the policy must uphold, such as transparency, fairness, privacy, and human oversight. These values shape the policy’s rules and help resolve situations the rules do not explicitly cover.

Finally, define the acceptable level of risk for each use case. Processing scheduling data, for example, is less risky than generating hiring recommendations, and drafting marketing content carries different risks than automating customer service responses. Oversight should scale with risk rather than apply uniformly to every use.

Establish Boundaries and Guidelines

Effective policies specify what’s covered and what’s not. Define who must comply, including remote workers, contractors, and vendors. Clarify department-specific requirements based on sensitivity.

List approved tools and explain how to request access. When a tool is not approved, point employees to an approved alternative. Detail acceptable uses, prohibited actions, and standards for validating outputs. Visual aids, such as decision trees, help employees apply the policy in real-world scenarios.
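For teams that want to embed this guidance in internal tooling, the decision-tree idea can also be written as a short check. The Python sketch below is only an illustration under stated assumptions: the four-level data classification, the tool names (`enterprise-chat-assistant`, `grammar-checker`, `public-chatbot`), and the alternatives mapping are all hypothetical placeholders that an organization would replace with its own approved-tool list and data categories.

```python
from enum import Enum


class DataClass(Enum):
    """Hypothetical data classification, ordered from least to most sensitive."""
    PUBLIC = 1
    INTERNAL = 2
    CONFIDENTIAL = 3
    RESTRICTED = 4  # e.g. customer PII or health records


# Hypothetical registry: approved tool -> most sensitive data class it may receive.
APPROVED_TOOLS = {
    "enterprise-chat-assistant": DataClass.CONFIDENTIAL,
    "grammar-checker": DataClass.INTERNAL,
}

# Hypothetical mapping from unapproved tools to approved alternatives.
ALTERNATIVES = {
    "public-chatbot": "enterprise-chat-assistant",
}


def check_request(tool: str, data: DataClass) -> str:
    """Walk a simple decision tree and return guidance for the employee."""
    # Step 1: is the tool approved at all?
    if tool not in APPROVED_TOOLS:
        alternative = ALTERNATIVES.get(tool)
        if alternative:
            return f"Not approved. Use {alternative} instead."
        return "Not approved. Submit the tool for review."
    # Step 2: is this data too sensitive for this particular tool?
    if data.value > APPROVED_TOOLS[tool].value:
        return f"Approved tool, but {data.name} data is not allowed. Remove or anonymize it."
    return "Allowed."


print(check_request("public-chatbot", DataClass.PUBLIC))
# Not approved. Use enterprise-chat-assistant instead.
print(check_request("grammar-checker", DataClass.CONFIDENTIAL))
# Approved tool, but CONFIDENTIAL data is not allowed. Remove or anonymize it.
print(check_request("enterprise-chat-assistant", DataClass.INTERNAL))
# Allowed.
```

Checking tool approval before data sensitivity mirrors how an employee would typically reason: first "may I use this tool?", then "may I put this data into it?"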

Designing Governance That Scales

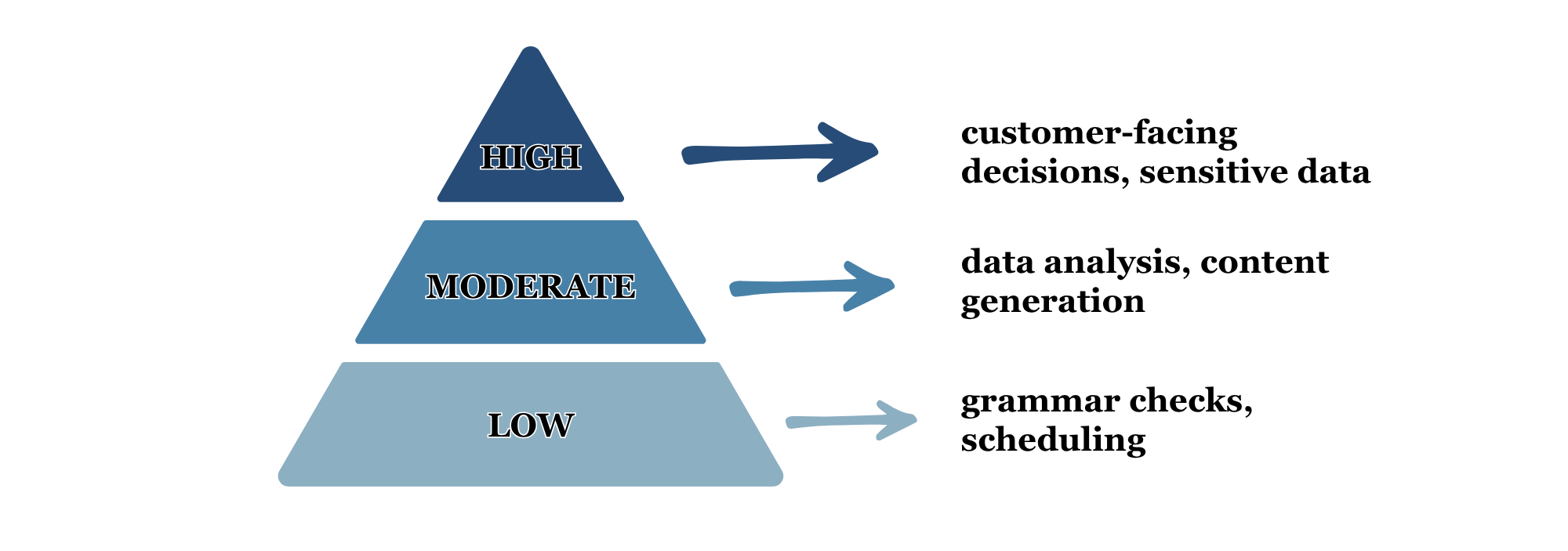

Governance should be proportional to risk without slowing down routine work. Consider a tiered approach:

- Low-risk: grammar checks, scheduling

- Moderate-risk: data analysis, content generation

- High-risk: customer-facing decisions, sensitive data
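To make tiers like these operational, they can be encoded as explicit rules so every use case is classified the same way. The Python sketch below assumes three hypothetical yes/no criteria for describing a use case and an illustrative set of controls per tier; neither the criteria nor the controls come from any standard, and each organization would define its own.

```python
from dataclasses import dataclass
from enum import Enum


class Tier(Enum):
    LOW = "low"
    MODERATE = "moderate"
    HIGH = "high"


# Hypothetical oversight requirements per tier; higher tiers add controls.
CONTROLS = {
    Tier.LOW: ["use approved tool"],
    Tier.MODERATE: ["use approved tool", "human review of outputs"],
    Tier.HIGH: [
        "use approved tool",
        "human review of outputs",
        "pre-deployment bias and security review",
        "named accountable owner",
    ],
}


@dataclass
class UseCase:
    name: str
    customer_facing_decision: bool  # output directly affects customers or applicants
    sensitive_data: bool            # e.g. customer PII, health or financial records
    generates_content_or_analysis: bool


def classify(uc: UseCase) -> Tier:
    """Assign a tier; the highest-risk criteria are checked first so they always win."""
    if uc.customer_facing_decision or uc.sensitive_data:
        return Tier.HIGH
    if uc.generates_content_or_analysis:
        return Tier.MODERATE
    return Tier.LOW


examples = [
    UseCase("grammar check", False, False, False),
    UseCase("market trend analysis", False, False, True),
    UseCase("loan pre-screening", True, True, True),
]
for uc in examples:
    tier = classify(uc)
    print(f"{uc.name}: {tier.value} -> {', '.join(CONTROLS[tier])}")
```

Because each tier's controls are listed explicitly, adding a new safeguard to one tier is a single, reviewable change rather than a rewrite of the policy text.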

Assign owners for tool evaluation, performance monitoring, violation response, and policy updates. Set up clear channels for raising questions and concerns. Use monitoring mechanisms such as usage analytics, periodic audits, and employee feedback to improve the framework over time.

Training That Drives Adoption

Even the best policy fails without effective training. Make onboarding practical: show new hires which tools they can use, how to request access, and how to handle common scenarios.

Tailor training by role. Use realistic examples drawn from actual work. Reinforce learning as tools and risks evolve. Designate AI champions within departments to answer questions and flag issues early.

Measure training effectiveness through assessments, usage patterns, and incident tracking. Use insights to strengthen weak areas.

Continuous Improvement Mechanisms

AI evolves rapidly, and static policies quickly become outdated. Establish quarterly review cycles to reassess approved tools, usage patterns, and emerging risks. Monitor regulatory developments and adjust proactively.

Set up feedback channels so employees can report challenges or unexpected results. When violations occur, investigate them carefully. Was the policy clear? Was the training enough? Use what you learn from these incidents to make the framework better.

Document changes carefully, communicate updates clearly, and make sure everyone works from the current version of the guidelines.

Implementing Your AI Policy Successfully

Even strong policies can fail if rollout is mishandled. Implementation requires attention to change management and practical adoption barriers.

Engage Stakeholders Early

Bring in IT, legal, department leaders, and frontline staff. Getting input from different teams helps balance protection with practicality and encourages everyone to support the policy.

Start With Pilot Programs

Test your framework in one department or use case. Gather feedback. Identify friction points. Refine before scaling. Early adopters help shape the final rollout and build momentum.

Address Adoption Barriers

Make the policy easy to use with quick guides, decision trees, and built-in prompts. Add guidance to daily workflows and offer real-time support so employees feel confident using the policy.

Measure What Matters

Track metrics such as:

- Policy usage

- Common questions

- Violation patterns

- Approval timelines

- Employee sentiment

Use data to optimize. Remove friction. Strengthen safeguards. Let evidence guide refinement.
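Several of these metrics can be computed from simple records. The Python sketch below assumes hypothetical `AccessRequest` and `Incident` record types and computes two of the listed metrics: the median approval timeline and the most common violation categories. The sample records are invented purely to illustrate the calculation.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import date
from statistics import median


@dataclass
class AccessRequest:
    tool: str
    submitted: date
    decided: date


@dataclass
class Incident:
    category: str  # hypothetical labels, e.g. "unapproved tool"


def approval_days(requests):
    """Median number of days from submission to decision."""
    return median((r.decided - r.submitted).days for r in requests)


def violation_patterns(incidents, top=3):
    """Most frequent violation categories, with counts."""
    return Counter(i.category for i in incidents).most_common(top)


# Invented sample data for illustration only.
requests = [
    AccessRequest("tool-a", date(2024, 3, 1), date(2024, 3, 3)),   # 2 days
    AccessRequest("tool-b", date(2024, 3, 4), date(2024, 3, 9)),   # 5 days
    AccessRequest("tool-c", date(2024, 3, 5), date(2024, 3, 15)),  # 10 days
]
incidents = [
    Incident("unapproved tool"),
    Incident("sensitive data shared"),
    Incident("unapproved tool"),
]

print(approval_days(requests))        # 5
print(violation_patterns(incidents))  # [('unapproved tool', 2), ('sensitive data shared', 1)]
```

The median is used rather than the mean so that a single stalled request does not distort the picture of typical approval times.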

Communicate Purpose Clearly

Help employees see why governance is important, not just which rules to follow. Share stories about risks that were avoided. Show how the policy supports company values and goals. Present governance as a foundation for innovation, not just extra paperwork.

Moving Forward

Organizations face a choice: build governance proactively or scramble reactively after incidents occur.

The reactive path costs more. It means retrofitting oversight onto entrenched behaviors, overcoming resistance, and cleaning up preventable damage.

Organizations that act early gain compounding advantages. They shape adoption patterns, prevent technical debt, and build cultures where responsible AI use is the norm.

Yes, the investment is substantial. But the returns are clear: reduced risk, accelerated innovation, and stronger competitive positioning.

Your AI policy is more than a compliance requirement. It is a key part of your strategy that will decide if AI gives you an advantage or creates new risks.

The time to build that infrastructure is now.